LLM Cost Reduction: The Hidden 30 Percent You Can Save with Prompt Optimization

Dec 2, 2025

TL;DR

Most companies are quietly burning ~30% of their LLM budget on bloated prompts, overkill models & zero cost guardrails. Clean up your prompts, route simple tasks to cheaper models and add basic caps/monitoring, and you turn “Big AI Invoices” into predictable, optimized spend.

Tools like Dakora help you do this on autopilot by treating prompts and LLM usage like real production infrastructure, not throwaway strings.

Imagine this.

Your team ships a successful LLM feature. Usage grows, everyone is happy, but then the finance team pings you:

“Hey, did you know we just spent six figures on ‘AI tokens’ in the last quarter?”

Nothing is technically broken. The product works. But your AI bill is quietly eating a big chunk of your budget.

If that feels uncomfortably familiar, you are not alone. AI and LLM spend is exploding across US and EU companies, and a surprising amount of that spend is simply… waste.

The good news: a lot of that waste lives in one place you can control. Your prompts and how you use models.

This post is about turning prompt chaos into a real FinOps lever, and how a platform like Dakora can help you capture those savings, not just once, but continuously.

AI spending is booming. Cost pressure is coming.

AI is not a side project anymore.

A recent Bain survey found that enterprise generative AI budgets doubled in 2024, now averaging about 10 million dollars per year for larger programs, and 60% of those initiatives are already moving into regular IT budget cycles. (Bain)

Another report on enterprise LLM adoption showed that 72% of organizations expect their LLM spending to increase in 2025, with more than a third already spending over 250,000 dollars per year just on LLM APIs. (Stanford HAI)

Zoom out even further. The Stanford AI Index estimates that US private AI investment hit 109 billion dollars in 2024, with generative AI pulling in a fast growing share of that money. (Stanford HAI)

Budgets are going up fast.

AI usage is moving from pilots into core products.

LLM line items on the cloud bill are no longer “noise”.

That is the macro picture. The micro picture, inside a single company, looks like this:

Different teams spin up separate LLM experiments.

Prompts get copied, tweaked, and hardcoded everywhere.

Cost tracking, if it exists at all, is a spreadsheet updated at the end of the month.

And then someone has to explain why the OpenAI or Azure bill just tripled.

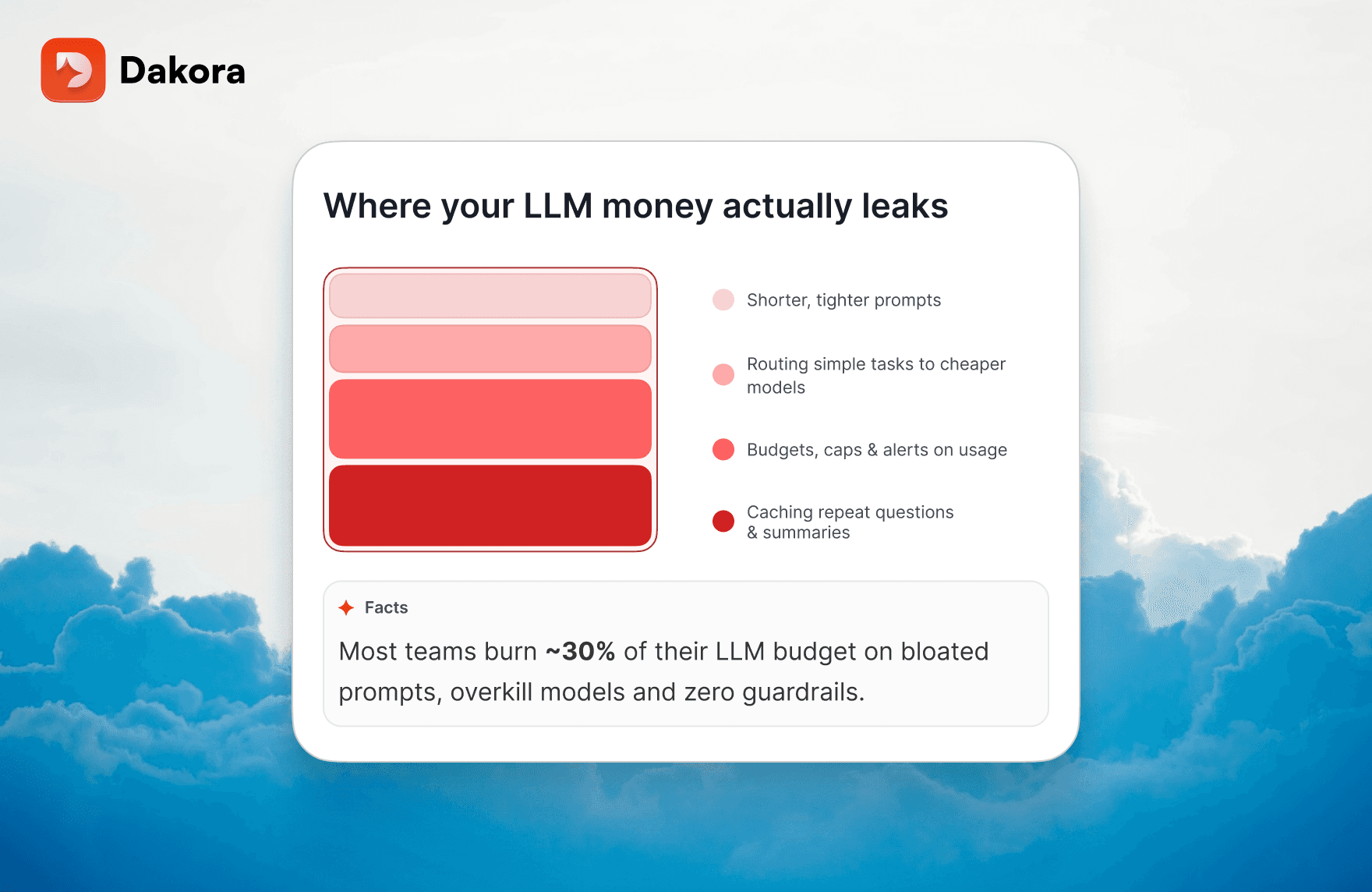

Problem | Average Waste | Fix | Impact |

|---|---|---|---|

Bloated prompts | 10% | Compression | Lower tokens |

Wrong model | 8% | Smaller models | Cost −50% |

No caching | 6% | Response cache | Save on repeats |

Missing budgets | 4% | Caps + alerts | Avoid spikes |

Where the money leaks: prompt chaos 101

Most teams do not overspend on LLMs because the models are inherently too expensive. They overspend because nobody is managing how those models are being used.

Here are the most common leaks.

1. Overly verbose prompts

Prompts grow organically. Someone adds more instructions “just to be safe”, another person adds three extra examples, and suddenly your system message is 1,500 tokens long. Every request now costs more, for no noticeable gain.

Best practice guides show that by cutting fluff and using precise instructions, you can reduce token count by 30% - 50% without losing quality. (MIT Sloan)

2. Stuffing the context window

Retrieval augmented generation is great, until you send the whole knowledge base into every request. Large context windows are powerful, but they are also expensive.

A fresh study on RAG showed that simple text preprocessing (like stopword removal) cut input token costs by 30 percent while preserving more than 95 percent of semantic quality.

3. Using top shelf models for every task

It is tempting to point everything at the “best” model. But if you are using a premium model for quick classifications that a smaller model could handle, you are just burning money.

Several model routing case studies show that sending routine tasks to cheaper models, and reserving bigger models only for complex work, can cut inference costs by 40 percent or more.

4. No caching, no reuse

If your app answers the same question a hundred times, and you pay a hundred times for it, that is on you. A simple cache for frequent queries and summaries can produce immediate savings in the 15% - 40% percent range, especially for high traffic endpoints.

5. No guardrails on usage

A bug in an agent loop, or an accidentally unbounded background job, can easily trigger thousands of API calls before anyone notices. Real world incidents with several thousand dollars lost to a single bug are common enough that they are now a known category of risk.

Put together, these patterns explain why many teams report that 20% - 50% percent of their LLM token spend brings little or no business value.

Prompt optimization as a FinOps lever

If you treat prompts and model usage as a managed asset, you can often cut LLM costs by roughly 30 percent without hurting quality.

This is not wishful thinking. Multiple sources converge on this range:

A cost optimization guide from Koombea notes that businesses can reduce LLM costs by up to 80 percent in extreme cases, and that token usage optimization and prompt engineering are the fastest ways to get immediate savings. (Cornell University)

Technical write ups on token optimization show that trimming and tightening prompts delivers 30 to 50 percent fewer tokens in many real deployments.

Newer cost optimization playbooks marketed to product and engineering teams even frame “cut LLM costs by 30 percent” as the baseline outcome when you combine prompt optimization, caching and model routing.

Thirty percent is not a magical number, but it is a realistic target that appears again and again when teams start to optimize. Not by doing anything exotic. Mostly by:

Shortening prompts.

Sending the right task to the right model.

Avoiding duplicate or useless calls.

Putting basic guards on budgets.

So why is everyone not doing this already?

Because in most organizations, prompts and LLM calls are still handled like experimental glue code, not like something that needs lifecycle management.

Signs you are in prompt chaos

If you are a CTO, Head of Data, or AI lead, some of these might ring a bit too true:

Nobody can answer the question “Which team generated last month’s LLM bill?” with precision.

Prompts live in five different repos, plus some are copy pasted from Slack.

You fix a bad answer by editing a prompt directly in production, hoping nothing else breaks.

Debugging a weird LLM output feels like trying to reproduce a ghost.

Finance asks for an AI cost forecast, and your first reaction is to open a spreadsheet and guess.

Under the hood, this usually maps to three missing capabilities.

1. No real observability

There is no clean trace of what the AI actually did. In multi step or agentic workflows, teams lack deep visibility into prompts, tool calls and decisions, so they learn about issues after they hit users or budgets.

2. No prompt lifecycle management

Prompts are edited like config strings, often directly in production, with no version control or rollback. Regression risk is high and A or B testing new prompts is painful.

3. No built in cost control

Most systems do not have spend limits, automated stop conditions, or alerts for abnormal usage. Cost spikes from bugs or unexpected traffic are detected only after the bill arrives.

You cannot fix what you cannot see. And you cannot run serious FinOps on AI if prompts and costs are invisible.

What “world class” LLM operations look like

The teams that run LLMs well in production tend to share a few habits:

They have dashboards that show token usage and cost by service, team and environment, often in close to real time.

They treat prompts like code: central repository, version control, approvals, rollback.

They use model routing so that cheaper models handle routine tasks and premium models are reserved for hard problems, which yields large cost cuts without hurting experience.

They implement caching for repeated questions and longer lived summaries.

They define budgets and alerts per product or per customer, so no feature can accidentally eat the entire AI budget.

In other words, they have turned what used to be ad hoc prompt tweaking into a discipline: Prompt FinOps.

You do not get there with a single script or dashboard. You need proper tooling that sits between your application code and the underlying LLMs.

This is where a platform like Dakora comes in.

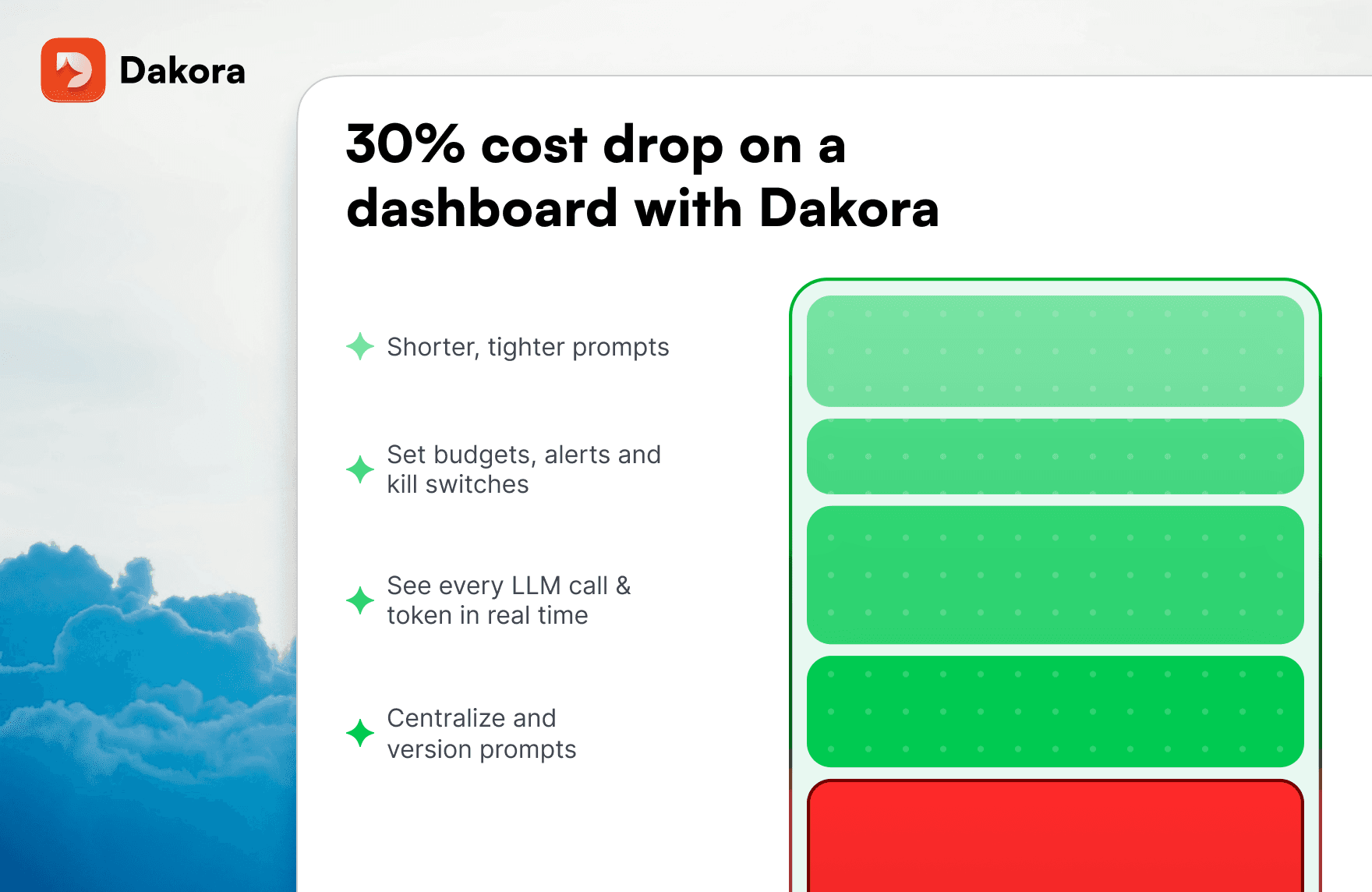

Dakora: turning prompt optimization into an ongoing practice

It is a production platform for LLM applications, initially designed around Microsoft Agent Framework, that puts prompt management, observability and cost control in one place instead of forcing you to stitch together multiple tools.

At a high level, Dakora gives you three things you do not get from a raw API.

1. Prompts as first class, versioned objects

In Dakora, prompts live in a central library:

You have version control with one click rollback, so you can safely experiment and revert if a change misbehaves.

You can create reusable templates with typed inputs instead of copy pasting strings around.

You get live reload, so you can update prompts without redeploying your application, which makes it much easier to A or B test and respond quickly to issues.

This alone moves you from “prompt chaos” to “prompt lifecycle”.

2. Deep observability into agents and LLM calls

Dakora plugs into your agent or LLM stack as middleware and captures:

So when something odd happens, you can scroll through the trace, see which prompt and which inputs led to it, and fix the right thing. Debugging becomes concrete, not guesswork.

3. Real time cost tracking and guardrails

Because every call is tracked, Dakora can give you:

Real time token usage and cost dashboards for each project.

Automatic spending caps with kill switches, so you can stop a runaway process before it burns through your budget.

This is where the 30 percent cost hypothesis turns into something measurable. You can:

Shorten a prompt, ship it with live reload.

Watch cost per request drop in the dashboard.

Confirm that quality stayed stable in your own metrics.

Do that across your top workflows, add caching and model routing, and suddenly that 30 percent reduction in LLM spend is not a slide in a deck, it is a graph in your monitoring tool.

A simple mental model for CTOs

If you are responsible for AI strategy, here is a simple way to think about it.

You are probably going to increase AI spend in the next 12 months anyway. Surveys show that most organizations expect double digit budget increases for generative AI, and many large programs have already doubled their budgets in 2024 alone. (Bain)

You have two options:

Let that spend grow in a fragmented, opaque way.

Prompts scattered.

Costs tracked after the fact.

Bugs and loops discovered from the invoice.

Make prompt and model usage a managed surface, just like you did with infrastructure a decade ago.

Centralize prompts and version them.

Instrument everything, from tokens to latency.

Put budgets, limits and alerts around it.

Option 2 does not stop you from experimenting. It gives you the visibility and guardrails to keep experimenting as usage grows, without your budget or your team burning out.

Closing thoughts: prompts are the new code

LLMs are not going away. US and EU companies are racing to build AI into their products and operations, and budgets are following that curve. (Stanford HAI)

The question is not “Should we use AI?” anymore. It is “Can we use AI in a way that is sustainable, observable and financially sane?”.

If you take one thing from this post, let it be this:

Prompts and model usage are not an afterthought. They are one of the biggest levers you have on AI cost and reliability.

Treat them with the same respect you give to your code and your infrastructure:

Clean them up.

Measure them.

Put them under control.

And if you do not want to build all the plumbing yourself, that is exactly the problem Dakora is trying to solve: a production platform that helps you ship LLM apps with prompt management, real time observability and cost control baked in from day one.